CaPS: Communication and Presentation Skills Program

Case Study Client Project

How might we support students’ presentation skill development for academic and career goals?

Name

Srishty Bhavsar

Role

UX Researcher, Case Study Designer

Duration of Program

2 years

Class

UX Design Client Studio - New York University

Problem Space

ECT students are expected to communicate complex ideas effectively within their university classes and workplaces, yet many lack structured opportunities to practice and improve their presentation skills.

Solution

CaPS provides a voluntary, self-directed, research-informed program that helps ECT students build their presentation and communication skills through structured modules, peer feedback, and evolving AI-supported tools.

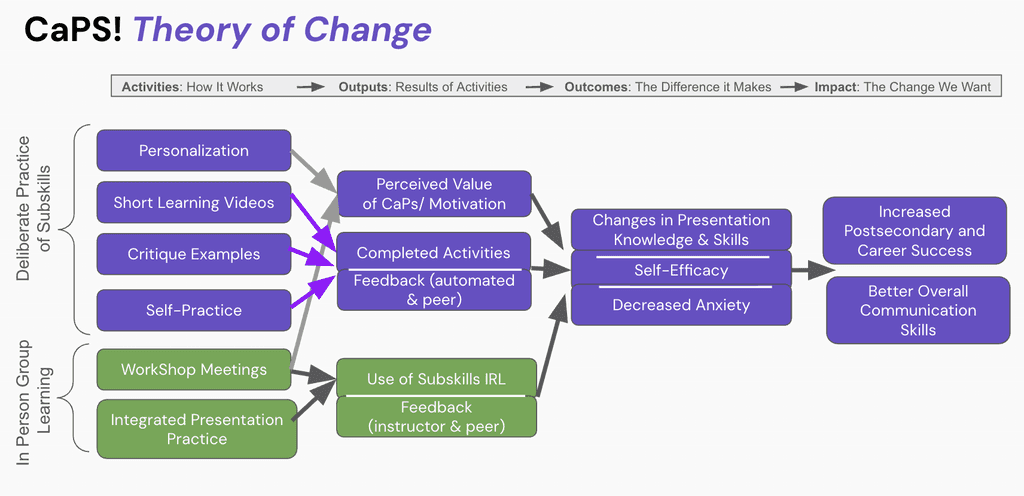

Guiding Theories

Deliberate Practice: Complex skills improve through focused, repeated practice on specific sub-skills with meaningful feedback.

Self-Regulation Theory: Learners grow by setting goals, monitoring progress, and reflecting on their learning strategies.

Self-Determination Theory: Motivation increases when learners experience autonomy, competence, and supportive social connections.

Background

Although the program benefits many students, CaPS remains a voluntary experience and is not a formal requirement in any ECT curriculum, preserving its independence as both a student-learning initiative and an active research project.

Origins of the CaPS Program

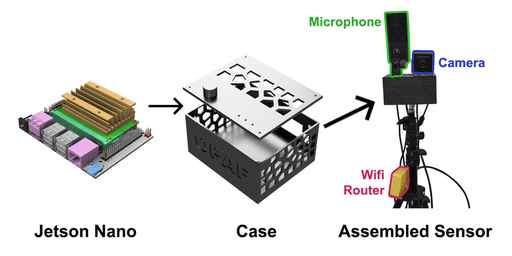

The idea for CaPS emerged indirectly from an earlier project by Professor Xavier Ochoa, who developed an AI companion capable of analyzing vocal delivery and body language to provide automated feedback on presentation performance.

Components of "OpenOPAF" Tool by Professor Xavier Ochoa

Challenges in Academic Adoption

Introducing a new AI-driven product into a university curriculum comes with significant barriers.

Faculty bandwidth constraints: Instructors have limited time to adopt or manage new tools.

Institutional caution around AI: Without sufficient research evidence, integrating AI into coursework is viewed as a substantial curricular change.

Scalability concerns: Adoption across courses and departments seemed unlikely in the short term.

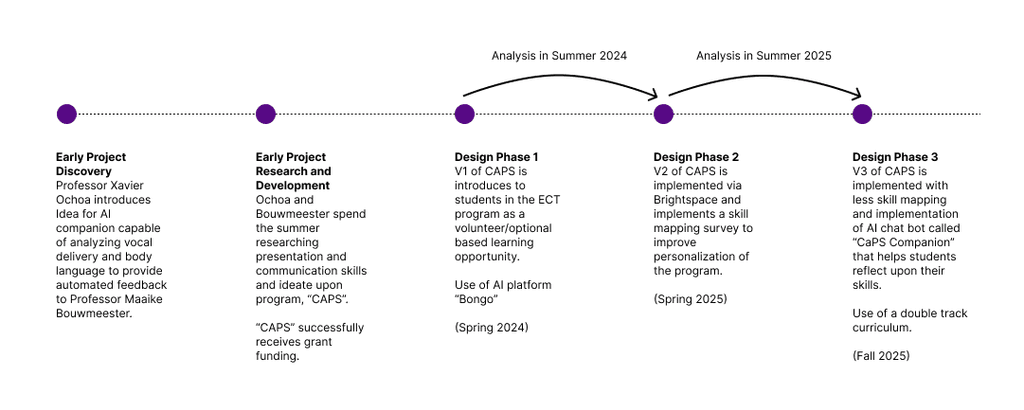

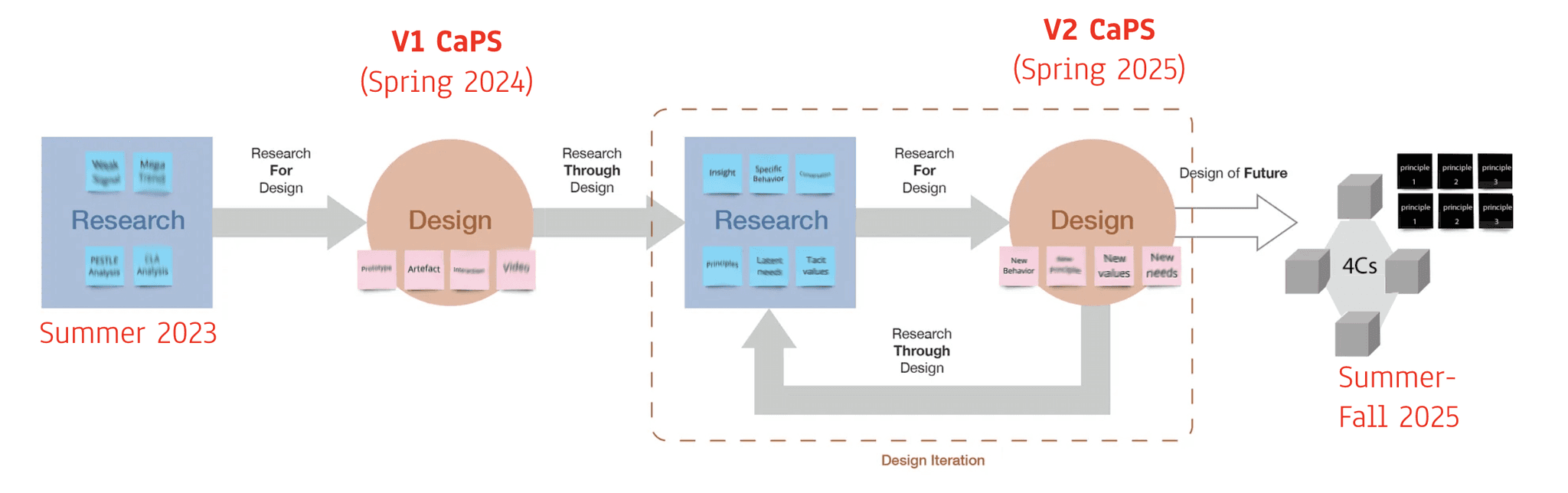

Program timeline

The goal of 4C's

This project timeline, taken from the CaPS 2025 presentation, shows the team's process through a design-based research approach. Ultimately, CaPS wants students to develop the 4C's of Critical Thinking, Communication, Collaboration, and Creativity within version 3 of the program.

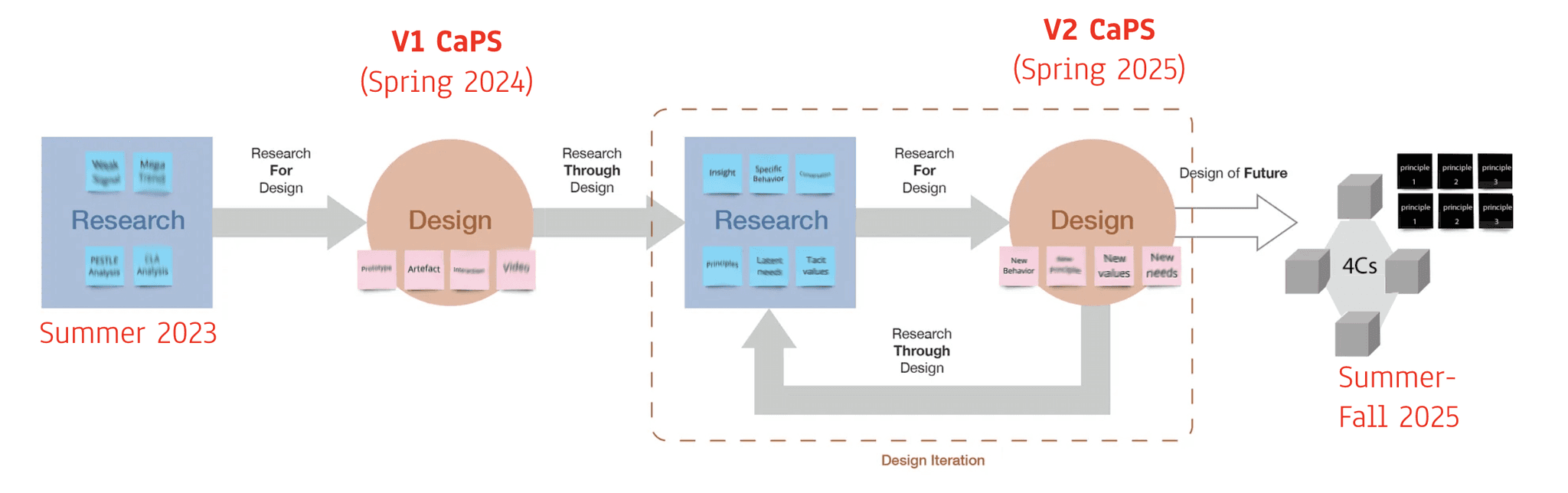

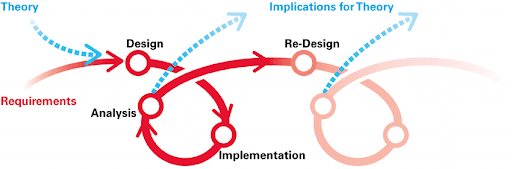

Learning Design Models

This learning model also highlights design-based research, and additionally shows how the CaPS program embeds the learning theories of deliberate practice, self-regulation, and self-determination into its redesign process. Beyond applying these theories, the CaPS program tests them through each version iteration and draws new implications for them from student usability insights and impact.

Team and roles

Xavier Ochoa, PhD

Director, Augment-Ed

Maaike Bouwmeester, PhD

ECT Clinical Asst Professor

Project Lead

Design & Research Roles

Even though roles are fluid, responsibilities roughly include:

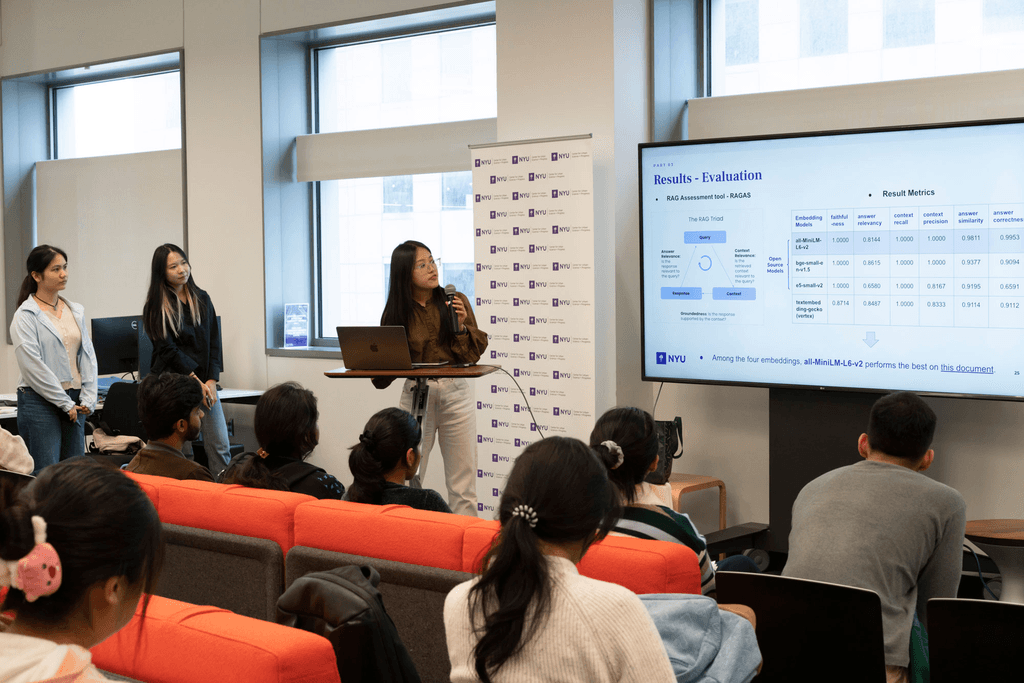

Founding Alumni of ECT Program (Elaine Li & Stephanie)

Design & Usability Team (Ziyun (Zyla) Cheng, Yinuo Ma)

Research & Facilitation Team (Eden Lee, Chloe Huang, Lingzhi (Lexus) Guan, Zulsyika Nurfaizah, Xixi Tian)

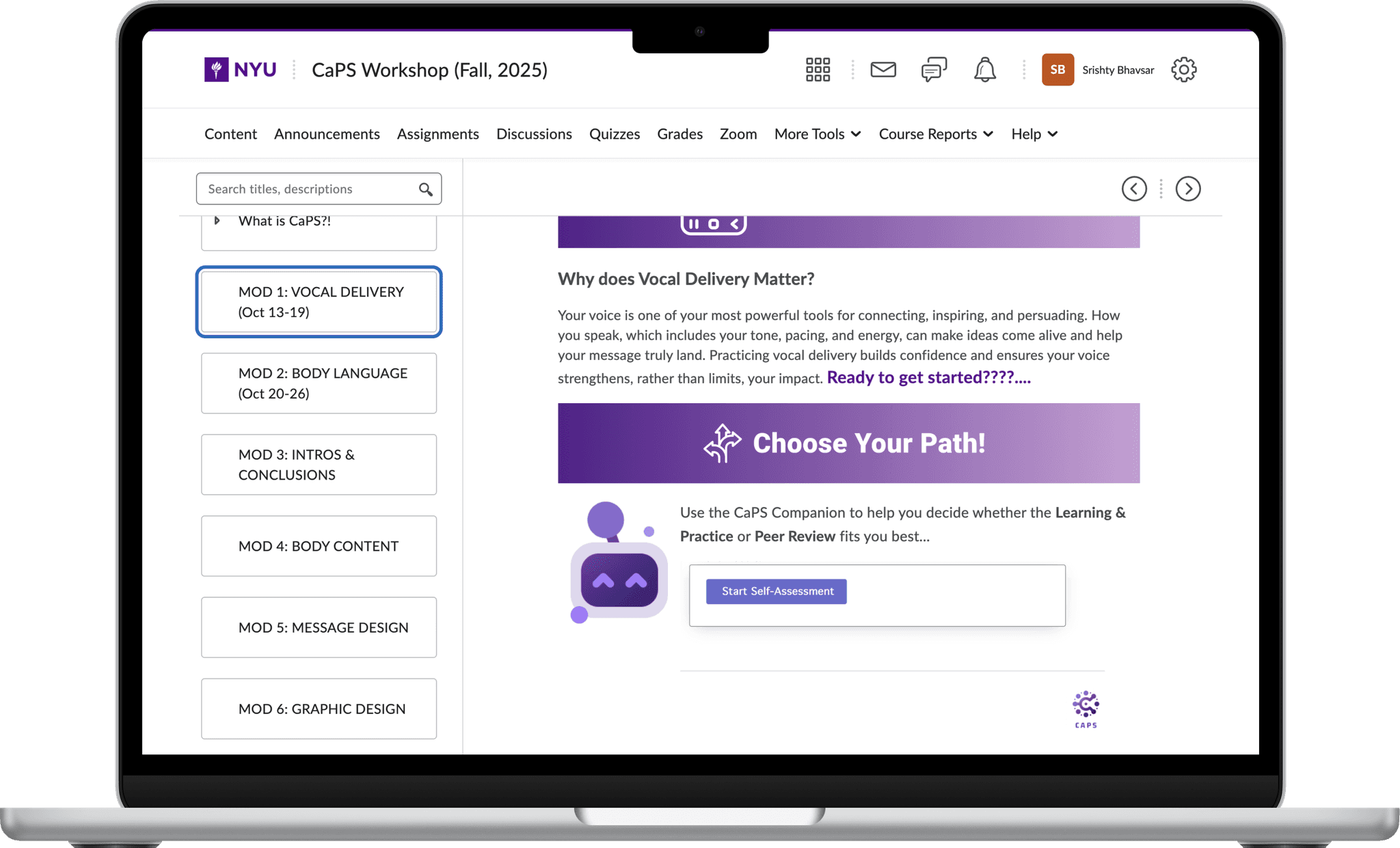

Design Process

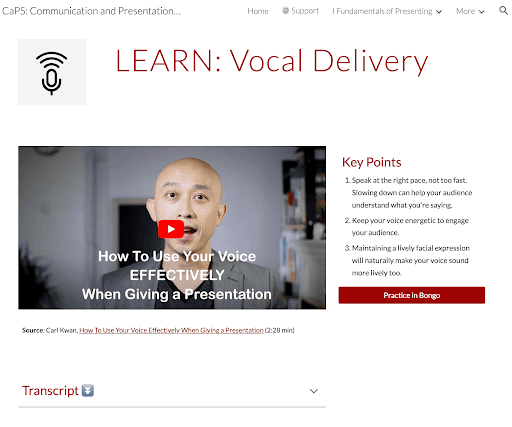

The CaPS program interface is currently designed around Brightspace because it is a familiar platform for NYU students, can easily display module-based courses, and allows AI software to be integrated with its content.

Design Phase 1 (v1)

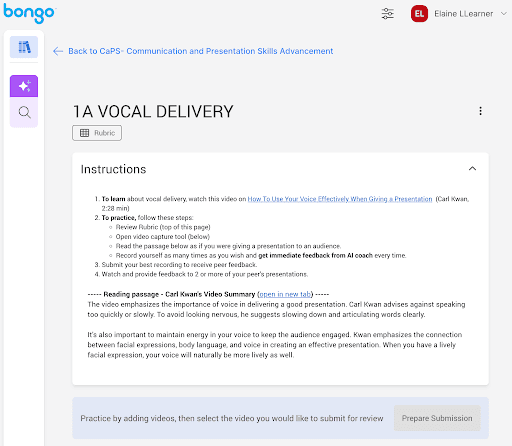

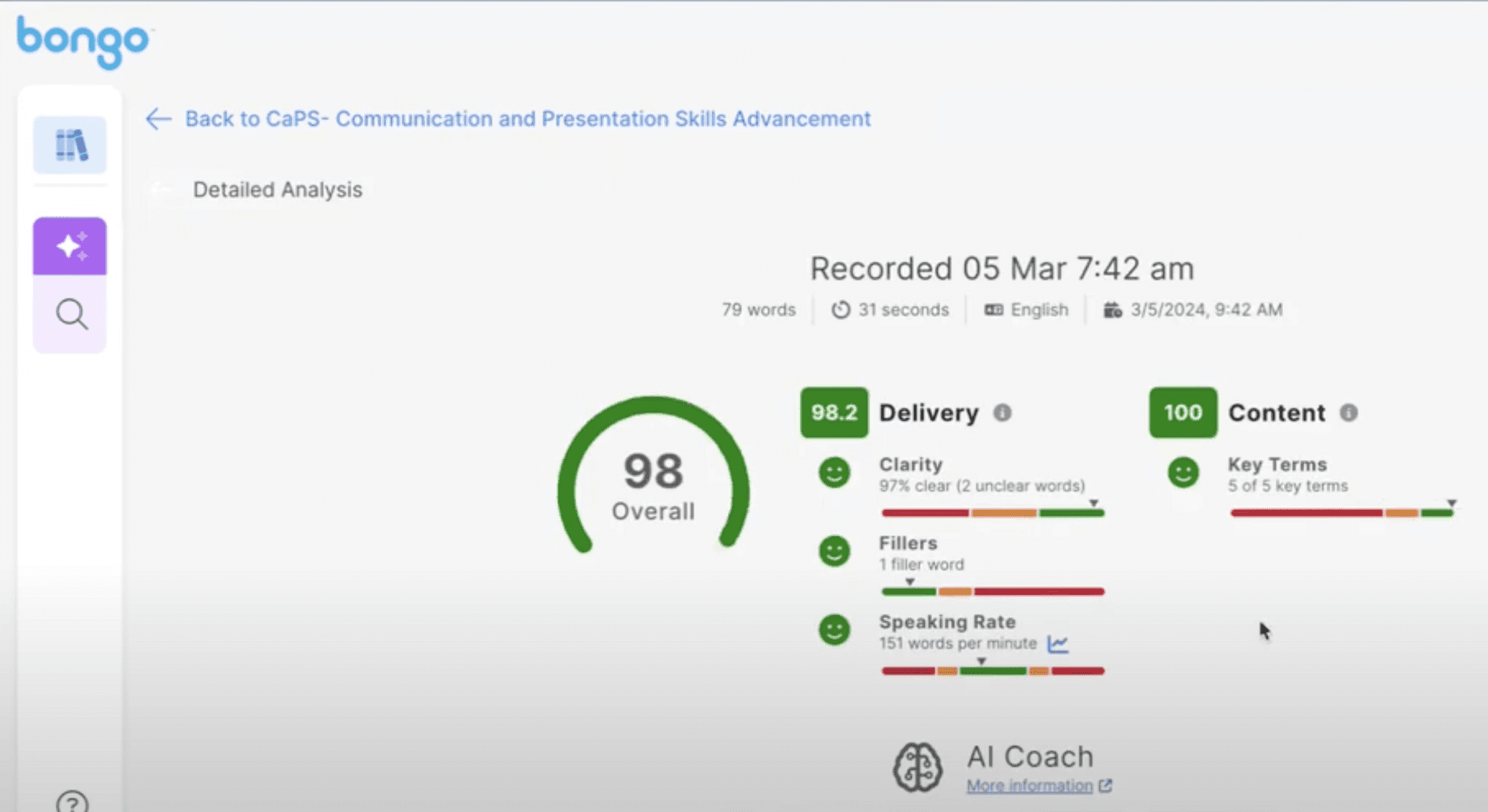

Phase 1 introduced the first version of CaPS as a standalone website that partnered with a startup company called "Bongo." At the time, the use of AI was new in the education space, and NYU was not confident about the safety of integrating AI into Brightspace (the official platform for NYU courses).

The program focused on two main areas: presentation elements and stylistic elements. This version served as an initial proof of concept funded by a design grant, allowing the team to test the core idea with a small pilot cohort.

Bongo’s early AI video-feedback tool was meant to help students practice presentation skills and receive detailed feedback through a video capture submission.

Design Phase 2 (v2)

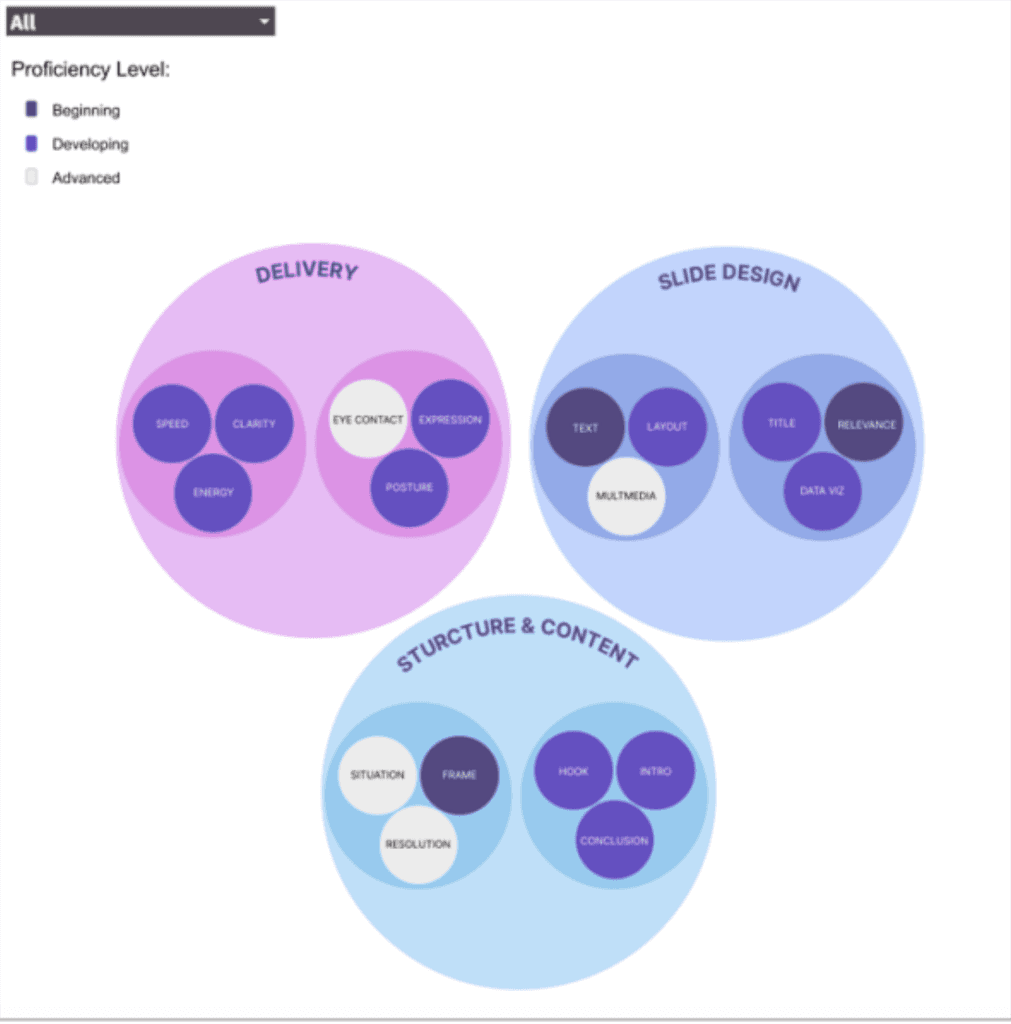

In Phase 2, CaPS moved fully onto Brightspace and introduced a more structured, personalized experience through a baseline skills survey and a new skills-mapping system that grouped skills into "Delivery," "Structure and Content," and "Slide Design."

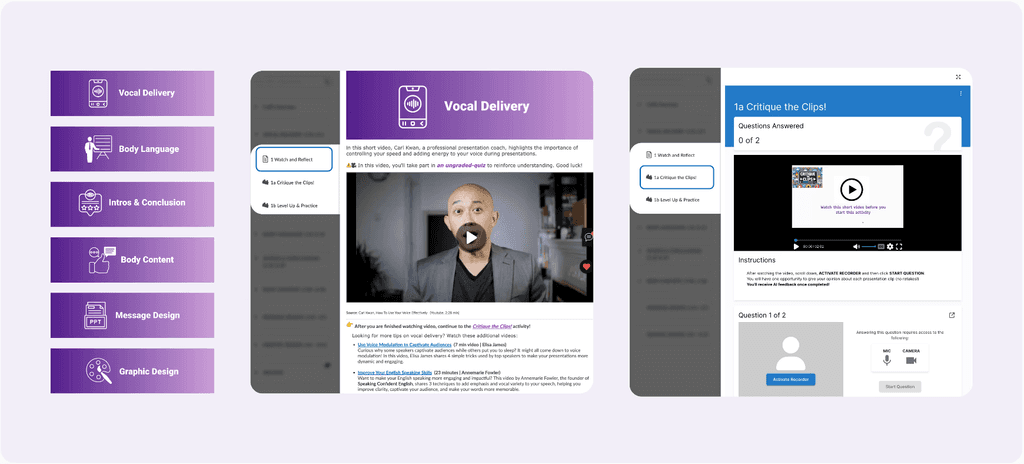

The program expanded into six standalone Brightspace modules and incorporated peer reviews, rubrics, and new live workshops, creating a more cohesive, user-friendly, and community-oriented version of the program. Bongo remained integrated in this version because hosting the program on Brightspace allowed for AI-enhanced coaching and generated feedback directly within the platform.

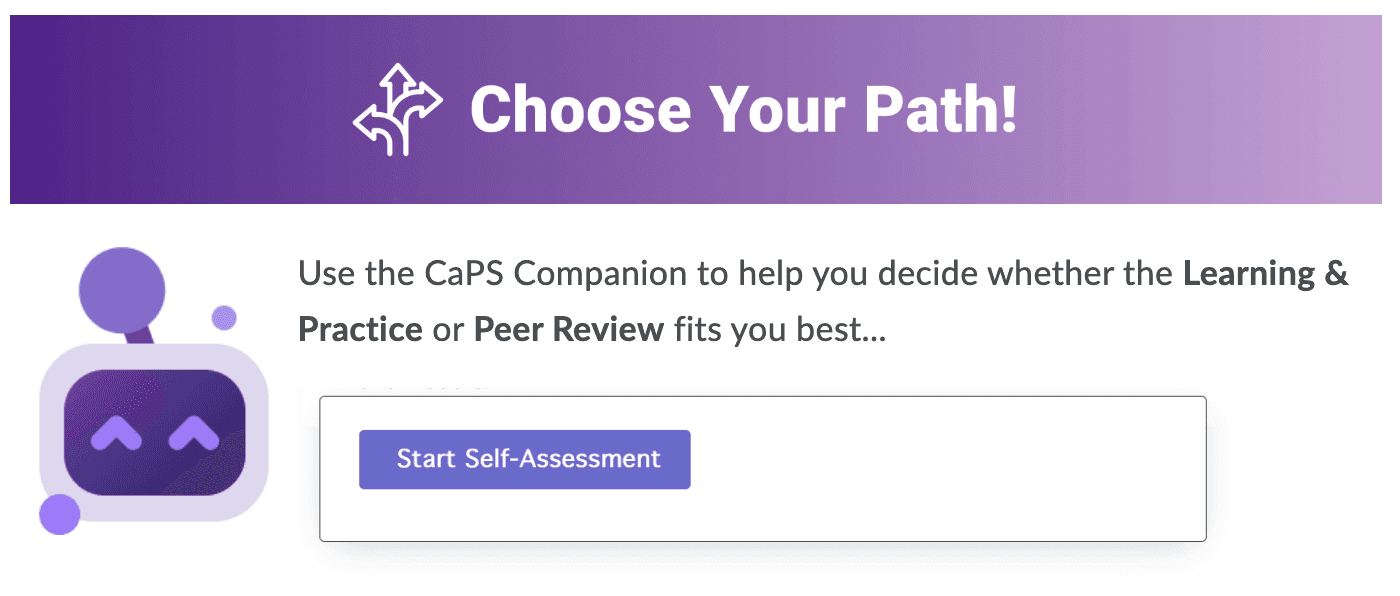

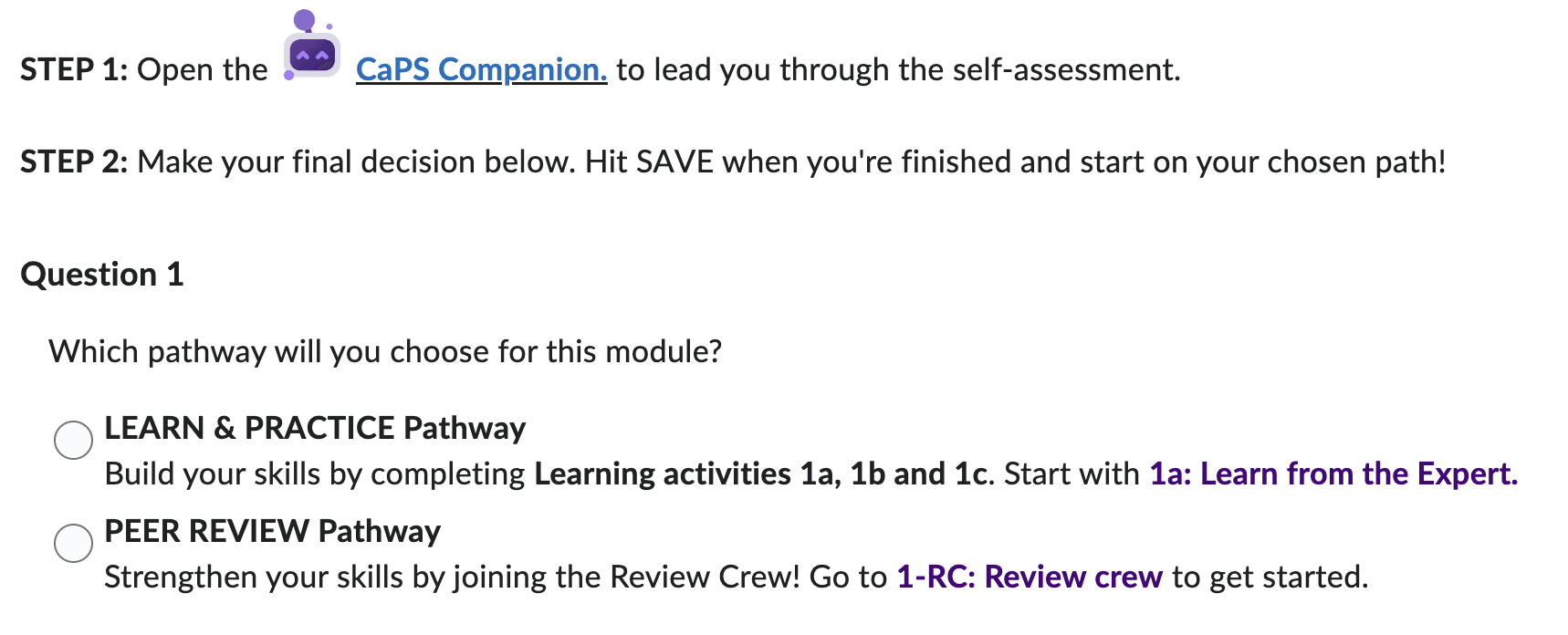

Design Phase 3 (v3)

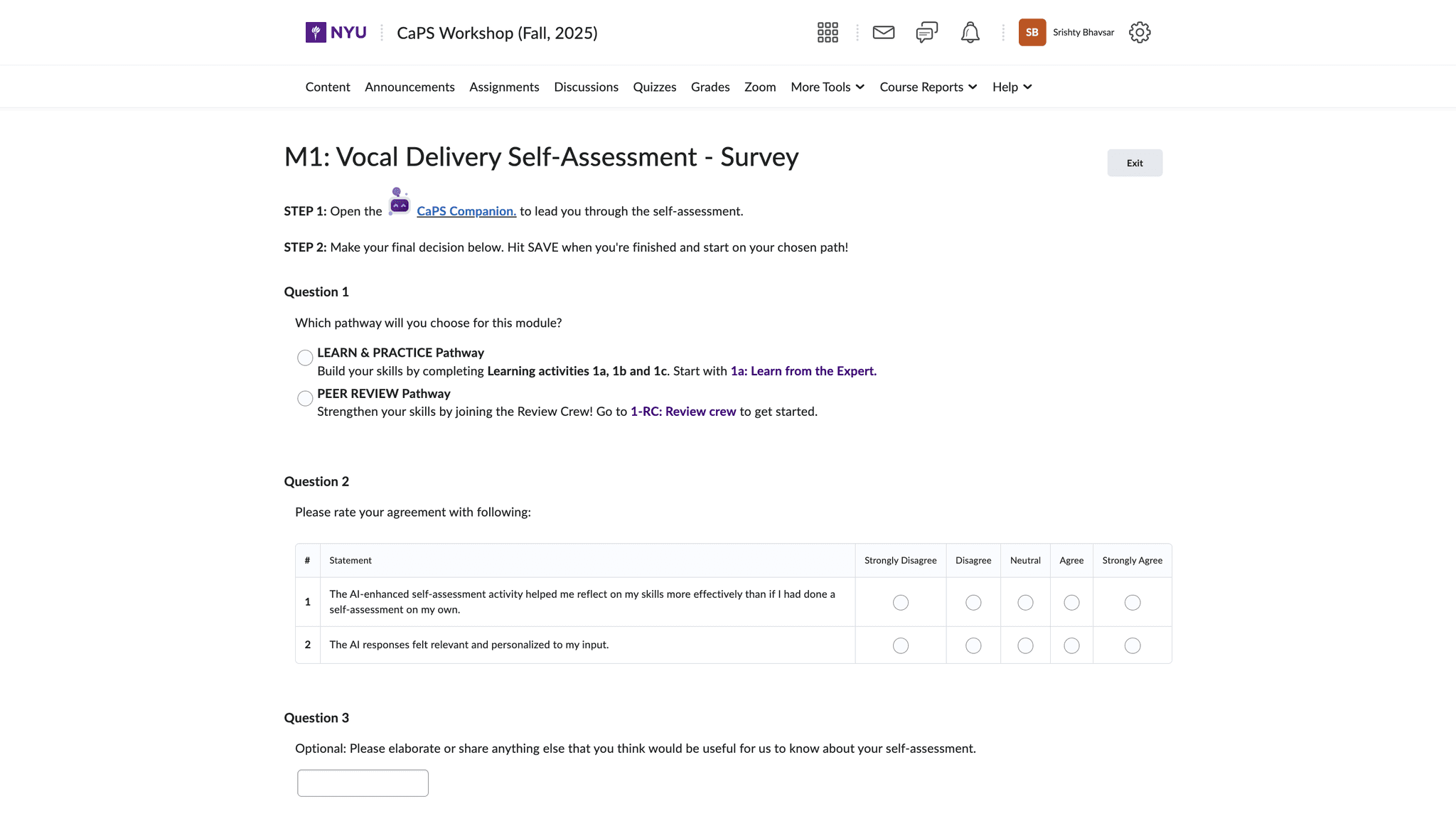

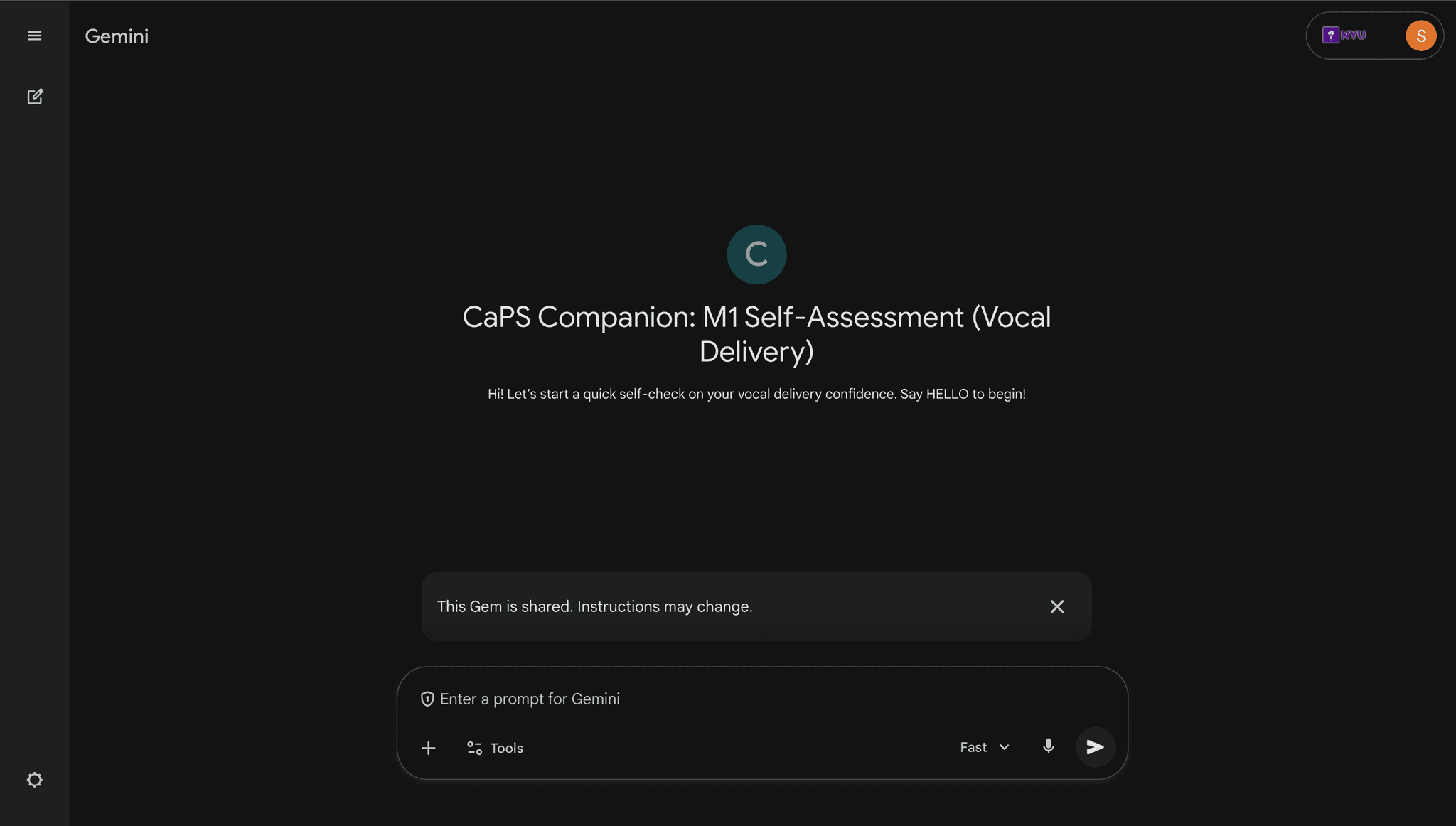

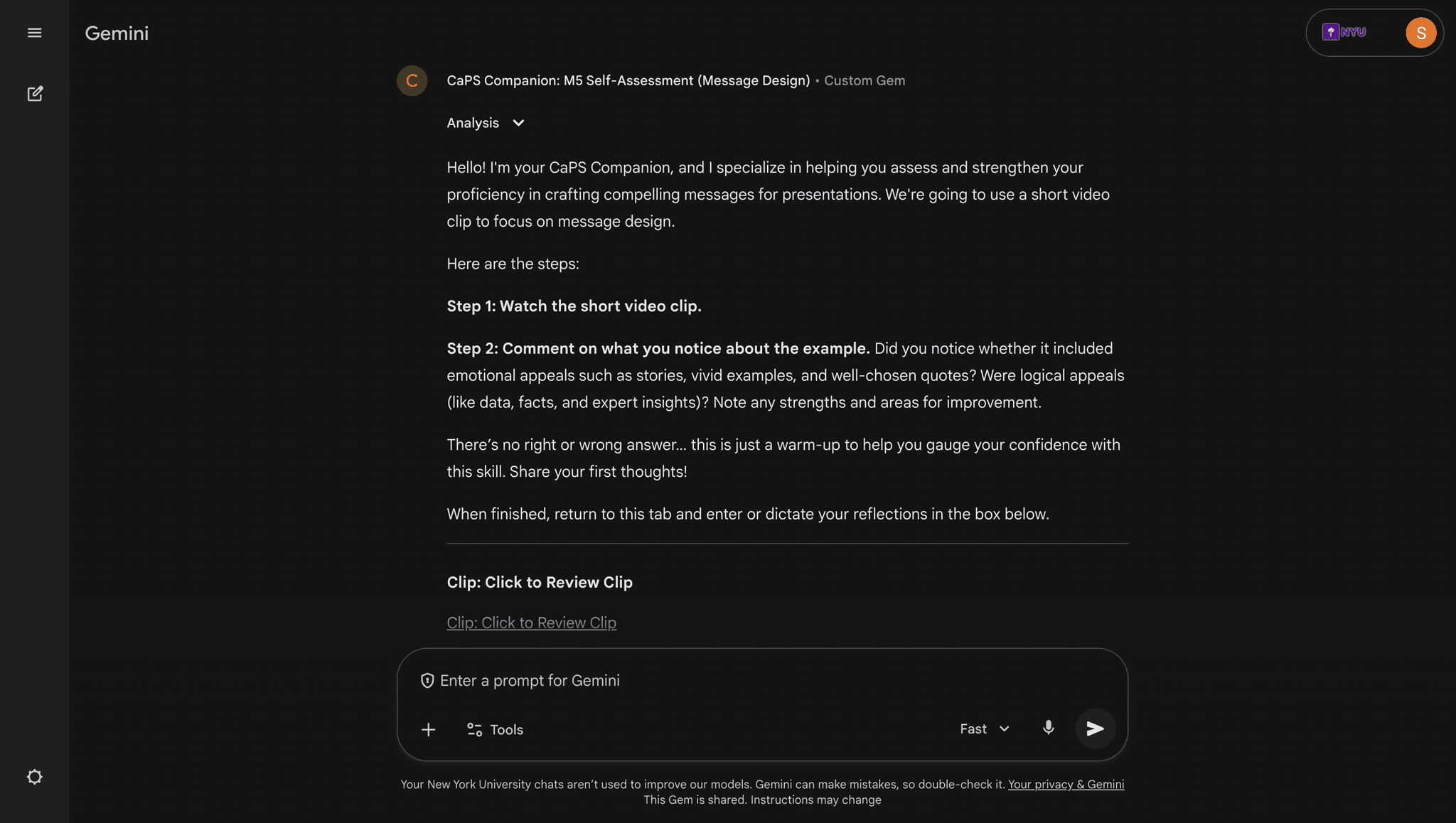

Phase 3 introduces clearer module priming, the CaPS Companion AI chatbot, and a new track system that lets students choose between Peer Review or Learn & Practice pathways. This version focuses on greater personalization, adaptive guidance, and a smoother, more flexible learning experience. There is no longer a skills map. The program continues to be hosted on Brightspace, and its new structure is being actively observed while the program is in session.

Peer Reviewing Group Track

The Peer Reviewing Group track is designed for students who want to strengthen their presentation skills by actively reviewing others’ work. In this track, students watch peer submissions, provide rubric-based feedback, and learn by analyzing different presentation approaches.

Learn & Practice Track

The Learn & Practice track guides students through structured lessons, examples, and quick exercises to help them build presentation skills step-by-step. Students learn core concepts, practice in short bursts, and gradually gain confidence before submitting a full presentation.

The CaPS Companion AI chatbot explains the task for each module to students with detailed instructions. It prompts students to share their insights from the provided sources and gives feedback on their reflections.

Testing of iterations

Between version iterations of CaPS, the team reviewed self-reported feedback and reflections from the student cohorts, which ultimately informed their design revisions.

V1 Testing Insights and implications

Students using CaPS showed noticeable improvements in their presentation skills, but still desired aspects of skill mastery and personalization within the program.

Students were able to relate the program's modules to their existing courses, but they wanted to track their progress on the specific skills they were lacking. This ultimately led to the addition of a skills assessment and skills mapping portion in V2.

While V1 of the program was successfully hosted on its own site, the CaPS team decided to move the platform onto Brightspace, the NYU learning management system. They predicted it would offer a more familiar user experience for CaPS students and seamless integration of the Bongo AI feedback.

V1 Site (not on Brightspace)

V2 Testing Insights and implications

While students liked the AI feedback that supported their learning, they found that peer feedback was ultimately more personal and actionable.

The SkillsMap was removed because students found it confusing, overwhelming, and disconnected from their real goals. Instead of helping them improve, it created cognitive overload, unclear next steps, and low motivation.

Testing showed students preferred simpler, action-oriented guidance, so the SkillsMap was replaced with two clearer pathways (Learn & Practice + Peer Review), giving students immediate value without the complexity of tracking 20+ micro-skills.

The learning pathways were accompanied by the AI chatbot and a self-assessment, allowing students to better understand their own skill gaps.

Bongo was removed because students found it unintuitive to use. Uploading videos was slow, the interface felt outdated, and the tool added unnecessary friction to a process that was supposed to feel simple and supportive. It also didn’t offer the flexible, low-stakes practice environment students wanted.

“I like the personalized report, especially that it shows my areas of strength and growth.”

“Seeing the skills in action helped me implement them into my presentation.”

“It was nice to hear [guest speaker] say that she fakes it and sometimes gets super sweaty. Very relatable!”

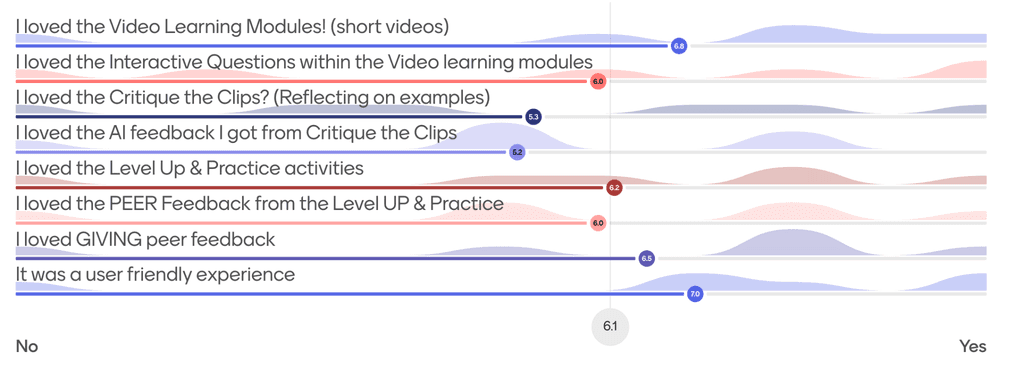

Ratings of CaPS activities and features on a 10-point scale (7 participants, May 2025)

Impact and findings

Overall, CaPS has successfully created a psychologically safe learning space where students can experiment, iterate, and build long-term communication habits, validating the program’s importance and informing ongoing refinements for future cohorts.

Behavioral Changes Observed

CaPS has shown meaningful improvements in how students approach, practice, and reflect on their presentation and communication skills. Students reported feeling more confident, supported, and self-aware throughout the program. The shift to a more flexible, low-stakes, self-directed structure helped reduce anxiety and increased students’ willingness to practice consistently.

Analytics from AI tools such as Bongo, together with qualitative feedback from self-reported reflections and rating scales, revealed that students valued personalized guidance, especially when AI feedback was paired with clear rubrics and peer input. The new Brightspace versions improved usability, leading to higher engagement and more frequent submissions.

Potential Next Steps for CaPS

With the newly integrated chatbot in V3 of CaPS, it will be important for the CaPS team to assess its impact. The team may continue refining the automated feedback to be more context-aware, discipline-specific, and supportive rather than corrective.

In addition, the CaPS team will observe the newly implemented track structure within V3 to see whether guided pathways truly personalize student progression.

Finally, because CaPS serves to improve communication and presentation skills, there is potential for the program to expand outside of ECT.

My Reflection

Working on this case study taught me that designing for a program is fundamentally different from, and possibly more challenging than, designing for a single product. Instead of documenting my own design decisions, I had to dig into another team’s process, interpret their choices, and piece together a cohesive narrative from primary research, interviews, and scattered artifacts such as Notion notes and slides. This experience pushed me to become a more thoughtful researcher, a better listener, and a clearer storyteller, and it ultimately strengthened my ability to translate complex, collaborative design work into an accessible and meaningful case study. I learned that it is more important to capture the main ideas than to write detailed paragraphs about every aspect of the program, because in the end the case study must be digestible for others to understand!

AI disclosure

In developing this case study, I used the Gemini AI assistant as an editor and thinking aid for designing a case study around a large amount of information.

I specifically asked Gemini to synthesize my primary research notes and meeting recording into a structured format for my case study. I then summarized the provided slides, modules, and Notion notes for the AI to help fill out the sections of the outline.

After the AI created a case study outline based on the assignment description and my notes, I wrote my own paragraphs for each section and asked the AI to refine their phrasing, check their grammar, and improve their overall clarity.

The AI did not fabricate any sources or design decisions outlined in this case study. All the AI outputs and analyses were reviewed by me as the author.

Sources

Bouwmeester, M., & Ma, Y. (2025, May). CAPS! A Human + AI Approach to Scalable, High-Impact Presentation Skill Development [Google slides]. https://docs.google.com/presentation/d/1cnkWenzBPsJRdQ4a9qzoeXHWuvxpTiMrjPw4OMnZ-pY/edit?slide=id.g35c72d5bdf5_0_0#slide=id.g35c72d5bdf5_0_0

Bouwmeester, M., Ochoa, X., & Li, E. (2024, June 19). Powering Pedagogy: Leveraging Learning Analytics and AI for Presentation Skill Development in Higher Education [Google slides]. https://docs.google.com/presentation/d/1cnkWenzBPsJRdQ4a9qzoeXHWuvxpTiMrjPw4OMnZ-pY/edit?slide=id.g35c72d5bdf5_0_0#slide=id.g35c72d5bdf5_0_0

Bouwmeester, M., Ochoa, X., Ma, Y., Cheng, Z., Huang, C., Li, E., & Lee, E. (n.d.). CaPS!: A Scalable Approach to Enhancing Presentation Skills (p. 3) [Review of CaPS!: A Scalable Approach to Enhancing Presentation Skills]. International Society of the Learning Sciences. https://drive.google.com/file/d/1ECMyjfIk-_IOQN86I_Idxe8ucgUYyW23/view

OpenOPAF: An Open Source Multimodal System for Automated Feedback for Oral Presentations - Scientific Figure on ResearchGate. Available from: https://www.researchgate.net/figure/OpenOPAF-core-hardware-components_fig2_385704313 [accessed 22 Nov 2025]

Problem Space Image: https://engineering.nyu.edu/sites/default/files/styles/content_header_620_2x/public/2025-03/240925-Experiential-Scholars-SON03851-sm_0.jpg?h=56d0ca2e&itok=OjFZklVl